High-quality implementation of educational approaches can have a significant impact on improving students' outcomes. Implementation is generally defined as a specified set of planned and intentional activities designed to integrate evidence-based practices into real-world settings (Mitchell, 2011).

Approaches, practices and interventions delivered in real-world school and classroom settings often look different from what was originally intended. Principals and teachers may decide to adapt elements of a program, and barriers in the school system may prevent an approach from being fully realised. This shows that learning gains depend on the quality of implementation, not just on the program itself. Implementation strategies such as training and ongoing teacher support are therefore important to consider in efforts to encourage positive student outcomes.

Implementation science is only slowly being applied in education (Albers & Pattuwage, 2017), yet it offers many concepts that educators can use. This article explores several publications that open the discipline of implementation to educators. These include a recent synthesis of the literature on Implementation in Education (Albers & Pattuwage, 2017), the Implementation Framework Getting to Outcomes (GTO) (Wandersman, Imm, Chinman, & Kaftarian, 2000), Deliverology (Barber, Kihn, & Moffit, 2011) and the Impact Evaluation Cycle (Evidence for Learning, 2017).

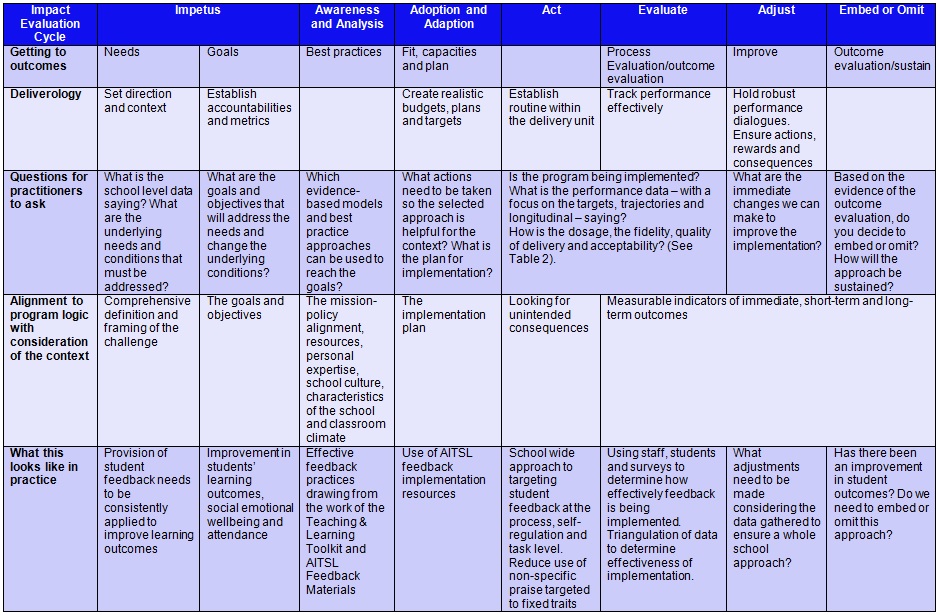

The differences and similarities of GTO, Deliverology and the Impact Evaluation Cycle are explored in Table 1. It presents a synthesised framework for educators to explore the implementation of their own approaches, with practical questions to ask at different steps of planning and implementation. The bottom row of Table 1 walks through these frameworks using the example of implementing feedback, showing what each step looks like in practice.

Table 1: Implementation models in education

(Adapted from: Australian Institute for Teaching and School Leadership, 2014; Barber et al., 2011; Domitrovich et al., 2008; Education Endowment Foundation, 2017; Evidence for Learning, 2017; Wandersman et al., 2000)

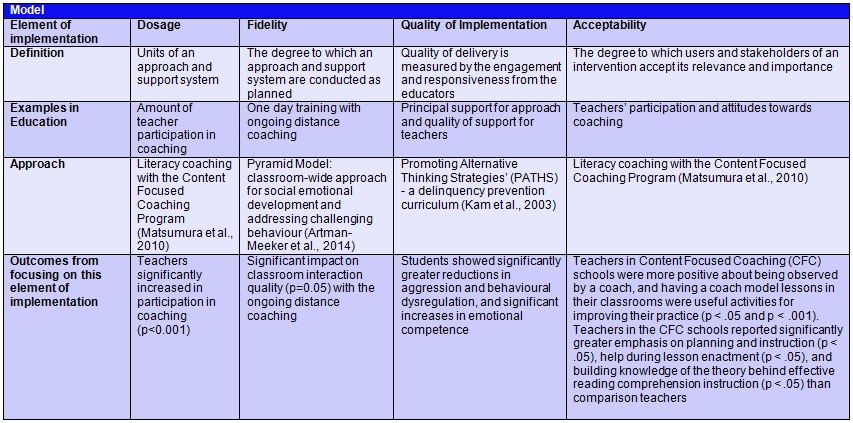

Four major indicators of implementation quality are dosage, fidelity, quality of delivery (Domitrovich et al., 2008) and acceptability (Albers & Pattuwage, 2017). These are outlined in Table 2.

A recent synthesis of the literature on implementation in education highlights how these indicators can be defined. The report draws on several randomised controlled trials and documents how dosage (participation in a given activity), fidelity (ongoing support), quality of implementation (support from principals) and acceptability (teachers' participation in, and attitudes to, that activity) influence students' academic and behavioural outcomes, as well as teachers' attitudes and practices.

When implementing an educational approach, providing ongoing support to teachers through coaching, workshops, and supervision has been shown to have a substantial impact on student outcomes (Artman-Meeker, Hemmeter, & Snyder, 2014; Clarke, Bunting, & Barry, 2014; Gray, Contento, & Koch, 2015; Kam, Greenberg, & Walls, 2003; Matsumura, Garnier, & Resnick, 2010; Sarama, Clements, Starkey, Klein, & Wakeley, 2008).

Given the findings of these studies, how can schools foster ongoing support for teachers' professional development to improve student outcomes? Professional learning communities are an important part of teachers' continuous development (Australian Institute for Teaching and School Leadership, 2017). They are a well-established feature of high-performing education systems such as Canada (Jensen, Sonnermann, Roberts-Hull, & Hunter, 2016). The Carnegie Foundation's concept of Networked Improvement Communities (NICs) specifies how these communities can work. An NIC should be:

- focused on a well-specified common aim;

- guided by a deep understanding of the problem, the system that produces it, and a shared working theory to improve it;

- disciplined by the methods of improvement research to develop, test, and refine interventions; and,

- organised to accelerate interventions into the field and to effectively integrate them into varied educational contexts. (Carnegie Foundation, 2017)

This thinking is also reflected in Professor John Hattie's recent book chapter *Time for a reboot: Shifting away from distractions to improve Australia's schools*. In it, Hattie highlights:

‘We need excellent diagnosis identifying strengths and opportunities to improve, then focus on understanding what has led us to the situation, and being clear on where we therefore need to go. We need gentle pressure, relentlessly pursued toward transparent and defensible targets, esteeming the expertise of educators who make these difference, while building a profession based on this expertise … an education implementation model that is shared between schools and not resident in only a few.’ (Hattie, 2017)

Table 2: A framework for measurement within implementation

(Adapted from: Albers & Pattuwage, 2017; Artman-Meeker et al., 2014; Domitrovich et al., 2008; Kam et al., 2003; Matsumura et al., 2010)

References

Albers, B., & Pattuwage, L. (2017). Implementation in Education: Findings from a Scoping Review. Melbourne: Evidence for Learning. Retrieved from http://www.evidenceforlearning.org.au/evidence-informed-educators/implementation-in-education

Artman-Meeker, K., Hemmeter, M. L., & Snyder, P. (2014). Effects of Distance Coaching on Teachers' Use of Pyramid Model Practices: A Pilot Study. Infants & Young Children, 27(4), 325-344.

Australian Institute for Teaching and School Leadership. (2014). Feedback. Retrieved from https://www.aitsl.edu.au/feedback/

Australian Institute for Teaching and School Leadership. (2017). Professional Learning Communities. Retrieved from https://www.aitsl.edu.au/docs/default-source/feedback/documents/aitsl-feedback-professional-learning-communities-strategy.pdf?sfvrsn=6

Barber, M., Kihn, P., & Moffit, A. (2011). Deliverology: From idea to implementation. McKinsey on Government, 6.

Carnegie Foundation. (2017). Using Improvement Science to Accelerate Learning and Address Problems of Practice. Retrieved from https://www.carnegiefoundation.org/our-ideas/

Clarke, A. M., Bunting, B., & Barry, M. M. (2014). Evaluating the implementation of a school-based emotional well-being programme: a cluster randomized controlled trial of Zippy's Friends for children in disadvantaged primary schools. Health Education Research, 29(5), 786-798.

Domitrovich, C. E., Bradshaw, C. P., Poduska, J. M., Hoagwood, K., Buckley, J. A., Olin, S., . . . Ialongo, N. S. (2008). Maximizing the implementation quality of evidence-based preventive interventions in schools: A conceptual framework. Advances in School Mental Health Promotion, 1(3), 6-28.

Education Endowment Foundation. (2017). Evidence for Learning Teaching & Learning Toolkit: Education Endowment Foundation. Retrieved from http://evidenceforlearning.org.au/the-toolkit/

Evidence for Learning. (2017). Impact Evaluation Cycle. Retrieved from http://evidenceforlearning.org.au/evidence-informed-educators/impact-evaluation-cycle/

Gray, H. L., Contento, I. R., & Koch, P. A. (2015). Linking implementation process to intervention outcomes in a middle school obesity prevention curriculum, ‘Choice, Control and Change’. Health Education Research, 30(2), 248-261.

Hattie, J. (2017). Time for a reboot: Shifting away from distractions to improve Australia's schools. In T. Bentley & G. C. Savage (Eds.), Educating Australia: Challenges for the decade ahead. Carlton: Melbourne University Publishing.

Jensen, B., Sonnermann, J., Roberts-Hull, K., & Hunter, A. (2016). Beyond PD: Teacher Professional Learning in High-Performing Systems, Australian Edition. Washington, DC: National Center on Education and the Economy.

Kam, C.-M., Greenberg, M. T., & Walls, C. T. (2003). Examining the role of implementation quality in school-based prevention using the PATHS curriculum. Prevention Science, 4(1), 55-63.

Matsumura, L. C., Garnier, H. E., & Resnick, L. B. (2010). Implementing Literacy Coaching: The Role of School Social Resources. Educational Evaluation and Policy Analysis, 32(2), 249-272.

Mitchell, P. F. (2011). Evidence-based practice in real-world services for young people with complex needs: New opportunities suggested by recent implementation science. Children and Youth Services Review, 33(2), 207-216.

Sarama, J., Clements, D. H., Starkey, P., Klein, A., & Wakeley, A. (2008). Scaling up the implementation of a pre-kindergarten mathematics curriculum: Teaching for understanding with trajectories and technologies. Journal of Research on Educational Effectiveness, 1(2), 89-119.

Wandersman, A., Imm, P., Chinman, M., & Kaftarian, S. (2000). Getting to outcomes: A results-based approach to accountability. Evaluation and Program Planning, 23(3), 389-395.

The authors note that ‘… providing ongoing support to teachers through coaching, workshops, and supervision has been shown to have a substantial impact on student outcomes.’

As a school leader implementing an educational approach, how do you ensure teachers receive ongoing professional development (PD) and supervision? Is this written into your implementation plan?